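In the Kubernetes network model, an overlay network built with Flannel, a widely used CNI network plugin, makes Pod-to-Pod connectivity straightforward. After we migrated our Spring Cloud microservices to K8S, no changes were needed: once the microservice Pods registered with Eureka, they could reach one another without trouble. In addition, with Ingress plus an Ingress Controller, user traffic on HTTP port 80 and HTTPS port 443 can be brought into cluster Services on every node.

In practice, however, the following needs came up:

The office network and the K8S Pod network are not connected. After developers finish a microservice module on their own machines, they want to start it locally and register it with the service registry of the development environment in K8S for debugging, rather than starting a pile of dependent services locally.

The office network and the K8S Service (Svc) network are not connected. MySQL, Redis and similar services running in K8S cannot be exposed through layer-7 Ingress, so developer machines cannot reach them directly with client tools; exposing each one through a NodePort Service would create a heavy maintenance burden.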

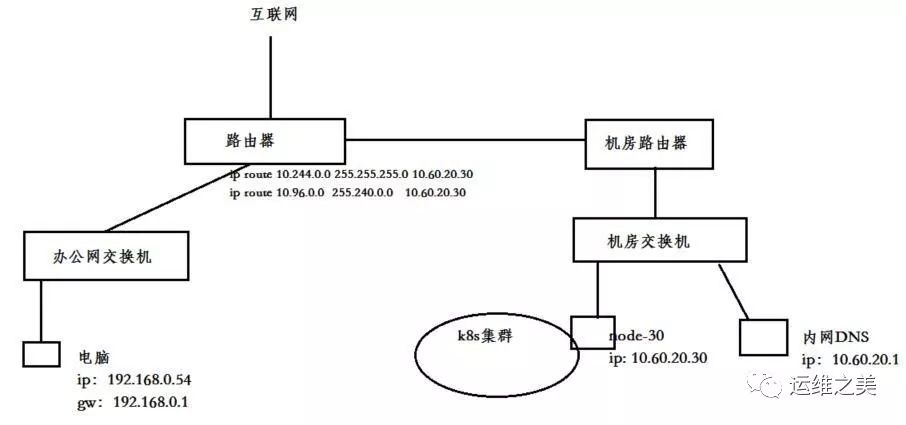

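Network connectivity configuration

We add one modestly sized Node (2 cores, 4 GB) to the K8S cluster, node-30, dedicated to routing and forwarding between the office network and the cluster's Pod and Service networks.

node-30 IP address: 10.60.20.30

Internal DNS IP address: 10.60.20.1

Pod range: 10.244.0.0/24; Svc range: 10.96.0.0/12

Office range: 192.168.0.0/22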
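When planning which destinations will be routed through node-30, it can help to check whether a given address actually falls inside one of the ranges above. The following is a minimal bash sketch; the helper names `ip_to_int` and `ip_in_cidr` are hypothetical and not part of any tool used in this article.

```bash
#!/usr/bin/env bash

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Return success (0) if IPv4 address $1 lies inside CIDR block $2.
ip_in_cidr() {
  local ip=$1 net=${2%/*} prefix=${2#*/}
  local mask=$(( prefix == 0 ? 0 : (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}

ip_in_cidr 10.96.0.10 10.96.0.0/12 && echo "10.96.0.10 is in the Svc range"
ip_in_cidr 10.244.1.5 10.244.0.0/24 || echo "10.244.1.5 is outside 10.244.0.0/24"
```

The second check shows that a /24 Pod range only covers 10.244.0.x. If your cluster hands out Pod subnets from a wider network (Flannel's stock manifest uses 10.244.0.0/16), the Pod range in the rules and routes that follow would need to be widened accordingly.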

Taint node-30 so that K8S does not schedule ordinary Pods onto it and consume its resources:

```bash
$ kubectl taint nodes node-30 forward=node-30:NoSchedule
```
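Any Pod that should still run on node-30 needs a toleration that matches this taint, and the match is on the taint key (`forward`), not on the node name. As a rough illustration of the matching rules, here is a simplified bash sketch; `tolerates` is a hypothetical helper written for this article, not a kubectl feature.

```bash
#!/usr/bin/env bash

# Return success if a toleration matches a taint (simplified model).
# Args: taint_key taint_value taint_effect tol_key tol_operator tol_value tol_effect
tolerates() {
  local t_key=$1 t_val=$2 t_eff=$3 key=$4 op=${5:-Equal} val=$6 eff=$7
  # An empty toleration effect matches every effect.
  [[ -z $eff || $eff == "$t_eff" ]] || return 1
  # An empty key is only valid with Exists and then matches every taint.
  if [[ -z $key ]]; then [[ $op == Exists ]]; return; fi
  # Otherwise the keys must be identical.
  [[ $key == "$t_key" ]] || return 1
  case $op in
    Exists) return 0 ;;                  # value is ignored
    Equal)  [[ $val == "$t_val" ]] ;;    # value must match too
    *)      return 1 ;;
  esac
}

# Taint from above: forward=node-30:NoSchedule
tolerates forward node-30 NoSchedule forward Exists "" NoSchedule \
  && echo "key=forward, operator=Exists: tolerated"
tolerates forward node-30 NoSchedule node-30 Exists "" NoSchedule \
  || echo "key=node-30, operator=Exists: not tolerated"
```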

On node-30, enable IP forwarding and set up SNAT:

```bash
# Enable IP forwarding
$ vim /etc/sysctl.d/k8s.conf
net.ipv4.ip_forward = 1
$ sysctl -p /etc/sysctl.d/k8s.conf

# SNAT traffic from the office network to Pods and Services
$ iptables -t nat -A POSTROUTING -s 192.168.0.0/22 -d 10.244.0.0/24 -j MASQUERADE
$ iptables -t nat -A POSTROUTING -s 192.168.0.0/22 -d 10.96.0.0/12 -j MASQUERADE
```

On the office egress router, add static routes that send the K8S Pod and Service ranges to node-30:

```
$ ip route 10.244.0.0 255.255.255.0 10.60.20.30
$ ip route 10.96.0.0 255.240.0.0 10.60.20.30
```
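The router commands take dotted-decimal netmasks, while the ranges earlier in the article are written in CIDR notation (/24, /12). The conversion can be sketched in bash as follows; `prefix_to_netmask` is a hypothetical helper name.

```bash
#!/usr/bin/env bash

# Convert a CIDR prefix length (0-32) to a dotted-decimal netmask.
prefix_to_netmask() {
  local prefix=$1
  local mask=$(( prefix == 0 ? 0 : (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  echo "$(( (mask >> 24) & 255 )).$(( (mask >> 16) & 255 )).$(( (mask >> 8) & 255 )).$(( mask & 255 ))"
}

prefix_to_netmask 24   # 255.255.255.0 (Pod range)
prefix_to_netmask 12   # 255.240.0.0   (Svc range)
prefix_to_netmask 22   # 255.255.252.0 (office range)
```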

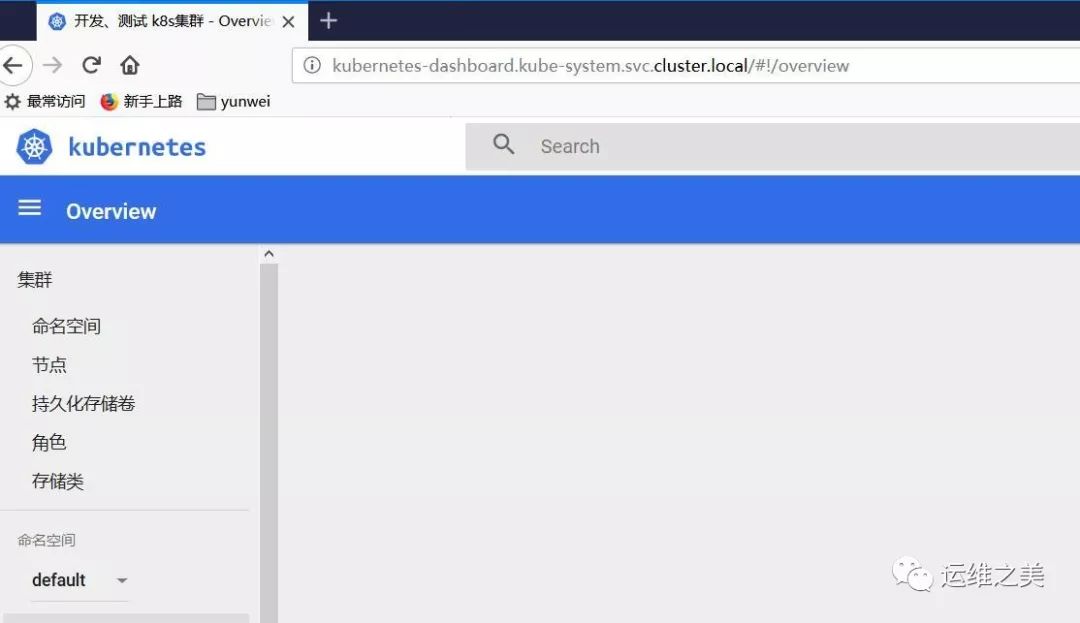

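DNS resolution configuration

After the steps above, we can reach services from a local machine by Pod IP and Service IP. In K8S, however, Pod IPs can change at any time, and Service IPs are not something developers and testers can easily look up. We therefore want the internal DNS server to hand queries for `*.cluster.local` to CoreDNS.

For example, suppose we agree to deploy (Project A, development environment 1, MySQL database) into the `projecta-dev1` Namespace (Namespace names must be lowercase). Because the path from local machines to the K8S Service network is already open, a local MySQL client only needs `mysql.projecta-dev1.svc.cluster.local` as the host: the DNS query reaches the internal DNS server, is forwarded to CoreDNS, and resolves to the Service IP.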
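The naming rule used here is `<service>.<namespace>.svc.cluster.local`. A small bash sketch of building such a name is shown below; `is_dns_label` and `svc_fqdn` are hypothetical helpers, and `cluster.local` is assumed to be the cluster domain. Since Kubernetes only accepts Namespace names that are lowercase DNS labels, the example uses the lowercase form `projecta-dev1`.

```bash
#!/usr/bin/env bash

# Check that a name is a valid RFC 1123 DNS label: lowercase letters,
# digits and '-', at most 63 characters, alphanumeric at both ends.
is_dns_label() {
  local LC_ALL=C
  local re='^[a-z0-9]([-a-z0-9]*[a-z0-9])?$'
  [[ ${#1} -le 63 && $1 =~ $re ]]
}

# Build the cluster-internal DNS name of a Service.
# Args: service name, namespace, optional cluster domain (default cluster.local)
# Only the namespace is validated; Service names have their own rules
# (for example, starting with a letter) that are not checked here.
svc_fqdn() {
  local svc=$1 ns=$2 domain=${3:-cluster.local}
  if ! is_dns_label "$ns"; then
    echo "invalid namespace name: $ns" >&2
    return 1
  fi
  echo "${svc}.${ns}.svc.${domain}"
}

svc_fqdn mysql projecta-dev1      # mysql.projecta-dev1.svc.cluster.local
svc_fqdn kube-dns kube-system     # kube-dns.kube-system.svc.cluster.local
```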

Because the internal DNS server has to query CoreDNS to resolve `*.cluster.local`, it needs network reachability to CoreDNS:

Option 1: the simplest approach is to run the internal DNS server on node-30 itself, so that it can reach kube-dns at 10.96.0.10 directly.

```bash
$ kubectl get svc -n kube-system | grep kube-dns
kube-dns   ClusterIP   10.96.0.10   <none>   53/UDP,53/TCP   20d
```

Option 2: in our lab setup the internal DNS server (10.60.20.1) is not on node-30, so we only need to give 10.60.20.1 a path to the Svc range 10.96.0.0/12:

```bash
# On the internal DNS server (10.60.20.1): add a static route
$ route add -net 10.96.0.0/12 gw 10.60.20.30

# On node-30 (10.60.20.30): SNAT
$ iptables -t nat -A POSTROUTING -s 10.60.20.1/32 -d 10.96.0.0/12 -j MASQUERADE
```

Option 3 (the one used in this lab): since the internal DNS server (10.60.20.1) is not on node-30, we can use a nodeSelector to deploy an Nginx Ingress Controller on node-30 and expose CoreDNS's TCP/UDP port 53 at layer 4.

Label node-30:

```bash
$ kubectl label nodes node-30 node=dns-l4
```

Create a Namespace:

```bash
$ kubectl create ns dns-l4
```

Deploy the Nginx Ingress Controller in the dns-l4 Namespace, pin it to node-30 with a nodeSelector, and add a toleration for the node's taint:

```yaml
# Note: these API versions match the cluster this was written for.
# On Kubernetes 1.16+ the Deployment needs apps/v1, and on 1.22+ the
# RBAC objects need rbac.authorization.k8s.io/v1.
kind: ConfigMap
apiVersion: v1
metadata:
  name: nginx-configuration
  namespace: dns-l4
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

---
kind: ConfigMap
apiVersion: v1
metadata:
  name: tcp-services
  namespace: dns-l4
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
data:
  # Expose kube-dns TCP port 53 on the node
  53: "kube-system/kube-dns:53"

---
kind: ConfigMap
apiVersion: v1
metadata:
  name: udp-services
  namespace: dns-l4
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
data:
  # Expose kube-dns UDP port 53 on the node
  53: "kube-system/kube-dns:53"

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nginx-ingress-serviceaccount
  namespace: dns-l4
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: nginx-ingress-clusterrole
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - "extensions"
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - events
    verbs:
      - create
      - patch
  - apiGroups:
      - "extensions"
    resources:
      - ingresses/status
    verbs:
      - update

---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: Role
metadata:
  name: nginx-ingress-role
  namespace: dns-l4
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - pods
      - secrets
      - namespaces
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - configmaps
    resourceNames:
      # Defaults to "<election-id>-<ingress-class>"
      # Here: "<ingress-controller-leader>-<nginx>"
      # This has to be adapted if you change either parameter
      # when launching the nginx-ingress-controller.
      - "ingress-controller-leader-nginx"
    verbs:
      - get
      - update
  - apiGroups:
      - ""
    resources:
      - configmaps
    verbs:
      - create
  - apiGroups:
      - ""
    resources:
      - endpoints
    verbs:
      - get

---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: RoleBinding
metadata:
  name: nginx-ingress-role-nisa-binding
  namespace: dns-l4
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: nginx-ingress-role
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: dns-l4

---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: nginx-ingress-clusterrole-nisa-binding
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: nginx-ingress-clusterrole
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: dns-l4

---
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: nginx-ingress-controller
  namespace: dns-l4
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/part-of: ingress-nginx
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
      annotations:
        prometheus.io/port: "10254"
        prometheus.io/scrape: "true"
    spec:
      # Run only on the node labelled node=dns-l4 (node-30)
      nodeSelector:
        node: dns-l4
      # Bind directly to the node's network so port 53 is reachable on 10.60.20.30
      hostNetwork: true
      serviceAccountName: nginx-ingress-serviceaccount
      tolerations:
        # The key must match the taint key set earlier (forward=node-30:NoSchedule)
        - key: "forward"
          operator: "Exists"
          effect: "NoSchedule"
      containers:
        - name: nginx-ingress-controller
          image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.21.0
          args:
            - /nginx-ingress-controller
            - --configmap=$(POD_NAMESPACE)/nginx-configuration
            - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
            - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
            - --publish-service=$(POD_NAMESPACE)/ingress-nginx
            - --annotations-prefix=nginx.ingress.kubernetes.io
          securityContext:
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
            runAsUser: 33
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          ports:
            - name: http
              containerPort: 80
            - name: https
              containerPort: 443
          livenessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
```

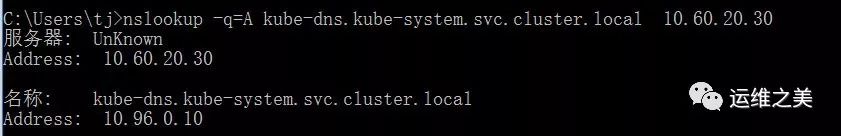

Once it is deployed, verify from a workstation that it works:

```bash
$ nslookup -q=A kube-dns.kube-system.svc.cluster.local 10.60.20.30
```

Here we use the lightweight Dnsmasq as an example internal DNS server, forwarding `*.cluster.local` queries from the internal network to kube-dns as exposed on node-30 (10.60.20.30).

```bash
$ vim /etc/dnsmasq.conf
strict-order
listen-address=10.60.20.1
bogus-nxdomain=61.139.2.69
server=/cluster.local/10.60.20.30
```
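The `server=/cluster.local/10.60.20.30` line is what splits the traffic: names at or under `cluster.local` go to node-30, and everything else goes to the regular upstream resolvers. The selection logic can be pictured with the bash sketch below. It is only a model of that single rule, not how Dnsmasq is implemented; `pick_upstream` is a hypothetical helper and `default` stands in for whatever upstreams Dnsmasq is otherwise configured with.

```bash
#!/usr/bin/env bash

# Pick the upstream DNS server for a query name, mimicking one rule of
# the form server=/<domain>/<ip>. Matching is on whole labels, so
# "notcluster.local" does not match "cluster.local".
pick_upstream() {
  local name=${1%.}                      # drop a trailing dot, if any
  local domain=cluster.local cluster_dns=10.60.20.30
  if [[ $name == "$domain" || $name == *".$domain" ]]; then
    echo "$cluster_dns"
  else
    echo "default"
  fi
}

pick_upstream kube-dns.kube-system.svc.cluster.local   # 10.60.20.30
pick_upstream example.com                              # default
```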

With these steps complete, the office network and the Kubernetes network are connected, and we can reach services in K8S directly by their Service DNS names.

This article is reposted from 「阳明的博客」; original: http://t.cn/EJNfDJY. Copyright belongs to the original author.